Containerization of AIs

Note: This section requires familiarity with the concepts of containerization and REST APIs.

To ensure safety, flexibility, backward compatibility, and independence, we store and run AIs using the BentoML containerization framework.

When a model is saved, it uses the latest schema version from `bento.ai_type.schemas.py`, which specifies how the service communicates with the Server. The model then becomes part of a Bento archive.
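The following minimal sketch assumes that recording the schema version with each model is what lets older models keep working after newer schema versions are added. It uses two hypothetical schema versions: a model saved earlier keeps validating requests against its own version, while a newly saved model picks up the latest one. The class names, the registry, and both helper functions are hypothetical; the SDK's actual schemas are Pydantic models with different names and fields.

```python
from dataclasses import dataclass, fields

# Hypothetical versioned request schemas (the SDK uses Pydantic models instead).
@dataclass
class RequestV1:
    text: str

@dataclass
class RequestV2:
    text: str
    pages: list

# Hypothetical registry mapping a schema version number to its schema class.
SCHEMAS = {1: RequestV1, 2: RequestV2}


def save_model_metadata() -> dict:
    """At save time, pin the newest schema version available."""
    return {"schema_version": max(SCHEMAS)}


def parse_request(metadata: dict, payload: dict):
    """Validate a payload against the schema version pinned for this model."""
    schema = SCHEMAS[metadata["schema_version"]]
    expected = {f.name for f in fields(schema)}
    if set(payload) != expected:
        raise ValueError(f"expected fields {sorted(expected)}, got {sorted(payload)}")
    return schema(**payload)


old_model = {"schema_version": 1}  # saved before RequestV2 was introduced
new_model = save_model_metadata()  # saved now, pins version 2
print(parse_request(old_model, {"text": "Invoice"}))  # RequestV1(text='Invoice')
```

Adding a new schema version to the registry changes what newly saved models use, but leaves the pinned version of existing models untouched.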

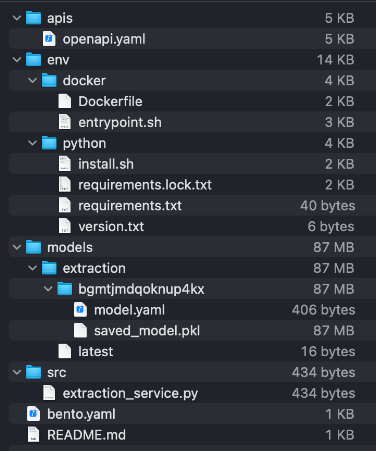

A Bento archive contains a lockfile with dependencies, the Python runtime version, the pickled model itself, a Dockerfile, and a Python file that serves a REST API for the model. The archive also specifies the schema version used by the model.

To save a model as a Bento, use the `save_bento()` method of the AI class you want to save, for example:

```python
from konfuzio_sdk.trainer.information_extraction import RFExtractionAI

# In practice, this would be a trained model; training is omitted here.
model = RFExtractionAI()
model.save_bento()
```

Running this code creates a Bento in your local Bento store. To specify an output path for the Bento archive, pass `output_dir`:

```python
model.save_bento(output_dir='path/to/your/bento/archive.bento')
```

The resulting Bento archive can be uploaded as a custom AI to the Server or to an on-premises installation of Konfuzio.

To test that the Bento instance of your model runs, serve it locally with the following command:

```bash
bentoml serve name:version  # for example, extraction_11:2qytjiwhoc7flhbp
```

Then open the Swagger UI of the Bento at 0.0.0.0:3000 and send requests to the available endpoint(s).
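Besides the Swagger UI, you can send requests from Python. The sketch below uses only the standard library. The endpoint name `extract` and the payload are hypothetical placeholders, so check the Swagger UI for the actual endpoints and request schema of your Bento. Because the server binds to 0.0.0.0, it is also reachable locally at `localhost:3000`.

```python
import json
import urllib.request

# Assumption: the Bento is served locally on port 3000 as described above.
BASE_URL = "http://localhost:3000"


def build_request(endpoint: str, payload: dict) -> urllib.request.Request:
    """Build a JSON POST request for a locally served Bento."""
    return urllib.request.Request(
        url=f"{BASE_URL}/{endpoint}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (needs a running server)."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


# Hypothetical endpoint and payload; replace them with what the Swagger UI shows.
req = build_request("extract", {"text": "Invoice no. 123"})
# result = send(req)  # uncomment while `bentoml serve` is running
```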

To run and test the Bento as a container, first build a Docker image from it with the following command:

```bash
bentoml containerize name:version  # for example, extraction_11:2qytjiwhoc7flhbp
```

Document processing with a containerized AI

This section describes the steps every Document goes through when it is processed by a containerized AI. This can be helpful if you want to create your own containerized AI: the sample conversion steps can be used as a blueprint for your own pipeline.

The first step is always the same: transforming a Document into JSON that adheres to a predefined Pydantic schema. This is necessary because a Document object cannot be sent to the container with the AI directly.
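Here is a minimal sketch of the first two steps: converting a Document into a JSON request outside the container, then recreating it inside the container. It uses plain dataclasses and the standard `json` module instead of Pydantic. The `Page` and `Document` classes and both conversion functions are hypothetical, heavily simplified stand-ins for the SDK's objects, schemas, and conversion functions.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


# Hypothetical, simplified stand-ins for the SDK's Page and Document objects.
@dataclass
class Page:
    number: int
    width: float
    height: float


@dataclass
class Document:
    text: str
    pages: List[Page] = field(default_factory=list)


def document_to_request(doc: Document) -> str:
    """Outside the container: serialize a Document into a JSON request body."""
    return json.dumps(asdict(doc))  # asdict also converts the nested Page objects


def request_to_document(body: str) -> Document:
    """Inside the container: recreate a Document from the JSON request body."""
    data = json.loads(body)
    pages = [Page(**page) for page in data["pages"]]
    return Document(text=data["text"], pages=pages)


doc = Document(text="Invoice 2024", pages=[Page(number=1, width=595.0, height=842.0)])
body = document_to_request(doc)
assert request_to_document(body) == doc  # the round trip preserves the data
```

Only the serialized JSON crosses the container boundary; each side works with its own Document object.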

| AI type | Processing step | Conversion function | Input | Output |
|---|---|---|---|---|
| Categorization | Convert a Document object into a JSON request | | Document | JSON structured as the latest request schema |
| Categorization | Recreate a Document object inside the container | | JSON | Document |
| Categorization | Convert page-level category information into a JSON to return from a container | | Categorized pages | JSON structured as the latest response schema |
| Categorization | Update page-level category data in an original Document | | JSON | Updated Document |
| File Splitting | Convert a Document object into a JSON request | | Document | JSON structured as the latest request schema |
| File Splitting | Recreate a Document object inside the container | | JSON | Document |
| File Splitting | Convert splitting results into a JSON to return from a container | | List of Documents | JSON structured as the latest response schema |
| File Splitting | Create new Documents based on splitting results | None | JSON | New Documents |
| Information Extraction | Convert a Document object into a JSON request | | Document | JSON structured as the latest request schema |
| Information Extraction | Recreate a Document object inside the container | | JSON | Document |
| Information Extraction | Convert info about extracted Annotations into a JSON to return from a container | | Annotation and Annotation Set data | JSON structured as the latest response schema |
| Information Extraction | Reconstruct a Document and add new Annotations into it | | JSON | Document |
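To illustrate the Categorization rows of the table, here is a minimal sketch of the last two steps: the container converts categorized pages into a JSON response, and the caller copies the page-level categories back into the original Document. The `Page` and `Document` classes, the response field names, and both functions are hypothetical simplifications, not the SDK's conversion functions or its actual response schema.

```python
import json
from dataclasses import dataclass, field
from typing import List, Optional


# Hypothetical, simplified stand-ins for the SDK's objects.
@dataclass
class Page:
    number: int
    category: Optional[str] = None
    confidence: float = 0.0


@dataclass
class Document:
    pages: List[Page] = field(default_factory=list)


def pages_to_response(pages: List[Page]) -> str:
    """Inside the container: turn categorized pages into a JSON response."""
    return json.dumps({"pages": [
        {"number": p.number, "category": p.category, "confidence": p.confidence}
        for p in pages
    ]})


def update_document(doc: Document, body: str) -> Document:
    """Outside the container: copy page-level categories into the original Document."""
    by_number = {p.number: p for p in doc.pages}
    for item in json.loads(body)["pages"]:
        page = by_number[item["number"]]
        page.category = item["category"]
        page.confidence = item["confidence"]
    return doc


original = Document(pages=[Page(1), Page(2)])
# Simulated container-side result for the recreated copy of the Document.
categorized = [Page(1, "Invoice", 0.97), Page(2, "Receipt", 0.88)]
update_document(original, pages_to_response(categorized))
print([(p.number, p.category) for p in original.pages])  # [(1, 'Invoice'), (2, 'Receipt')]
```

Matching pages by number, rather than by list position, keeps the update correct even if the response lists pages in a different order.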

The communication between Konfuzio Server (or any other environment where the AI is served) and a containerized AI can be described by the following scheme:

If you want to containerize a custom AI, refer to the documentation on how to create and save a custom Extraction AI, a custom File Splitting AI, or a custom Categorization AI, respectively.

To learn more about Bento containerization and how to configure your instance of Konfuzio to support containerized AIs, refer to Dockerized AIs.