Installation Guide

On-premises, also known as self-hosted, is a setup that allows Konfuzio Server to be implemented 100% on your own infrastructure or any cloud of your choice. In practice, it means that you know where your data is stored, how it’s handled and who gets hold of it.

A common way to operate a production-ready and scalable Konfuzio installation is via Kubernetes. An alternative deployment option is the Single VM setup via Docker. We recommend using whichever option is more familiar to you.

On-premises Konfuzio installations allow you to create Superuser accounts, which can access all Documents, Projects and AIs via a dedicated view, and to create custom Roles.

Konfuzio Server has been successfully installed on various clouds.

Here are a few examples:

Amazon Web Services (AWS)

Microsoft Azure

Google Cloud Platform (GCP)

IBM Cloud

Oracle Cloud Infrastructure (OCI)

Alibaba Cloud

VMware Cloud

DigitalOcean

Rackspace

Salesforce Cloud

OVH Cloud

Hetzner

Telekom Cloud

Billing and License

When you purchase a Konfuzio Server self-hosted license online, we provide you with the necessary credentials to download the Konfuzio Docker Images. If you deploy via Kubernetes, you can find the Helm Charts here. An essential part of this process is the unique BILLING_API_KEY. This key should be set as an environment variable when starting the Konfuzio Server, as described in the following section.

Setup Billing API

The BILLING_API_KEY needs to be passed as an environment variable to the running Docker container.

This is in line with the fact that all Konfuzio configuration is done via environment variables.

Here is an example command to illustrate the setting of the BILLING_API_KEY environment variable:

docker run -e BILLING_API_KEY=your_api_key_here -d your_docker_image

In the command above, replace your_api_key_here with the actual billing API key and your_docker_image with the name of your Docker image.

Here’s what each part of the command does:

docker run: This is the command to start a new Docker container.

-e: This option allows you to set environment variables. You can also use “--env” instead of “-e”.

BILLING_API_KEY=your_api_key_here: This sets the “BILLING_API_KEY” environment variable to your actual API key.

-d: This option starts the Docker container in detached mode, which means it runs in the background.

your_docker_image: This is the name of your Docker image. Replace this with the actual name of your Docker image.
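If the container is started from a script rather than typed by hand, the same command can be assembled as an argument list. The following is a minimal sketch, not part of the Konfuzio tooling: the helper name docker_run_args is hypothetical, and the image name and key are the placeholders from the command above.

```python
import shlex


def docker_run_args(image, env, detached=True):
    """Build the argument list for `docker run` with environment variables."""
    args = ["docker", "run"]
    for name, value in env.items():
        # Each variable becomes one `-e NAME=value` pair.
        args += ["-e", f"{name}={value}"]
    if detached:
        args.append("-d")  # detached mode: run in the background
    args.append(image)
    return args


# Placeholders taken from the example command; replace them with real values.
args = docker_run_args("your_docker_image", {"BILLING_API_KEY": "your_api_key_here"})
print(shlex.join(args))
```

Passing the list to subprocess.run(args, check=True) would start the container without going through a shell, which avoids quoting problems if the key contains special characters.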

Instead of using “plain” Docker, we recommend using our Helm Chart or Docker-Compose to install Konfuzio Server.

For Docker-Compose you can simply replace the BILLING_API_KEY placeholder in the docker-compose.yaml file.

When using Helm on Kubernetes, the BILLING_API_KEY is set as ‘envs.BILLING_API_KEY’ in the values.yaml file.

The Konfuzio container continues to report usage to our billing server, i.e., app.konfuzio.com, once a day. We assure you that the containers do not transmit any customer data, such as the image or text that’s being analyzed, to the billing server.

Important Updates

If the unique key associated with the Contract on app.konfuzio.com is removed, your self-hosted installation will cease to function within 24 hours. Currently, there is no limit on the number of pages that can be processed within this time. To preserve the integrity of our license checks, the Konfuzio Server will also refuse to start if any Python file within the container has been modified.

Technical Background

Our server restarts itself after 500 requests by default. The code that prevents changes to Python files in the container has been obfuscated to ensure a significant challenge for anyone attempting to modify the source code.

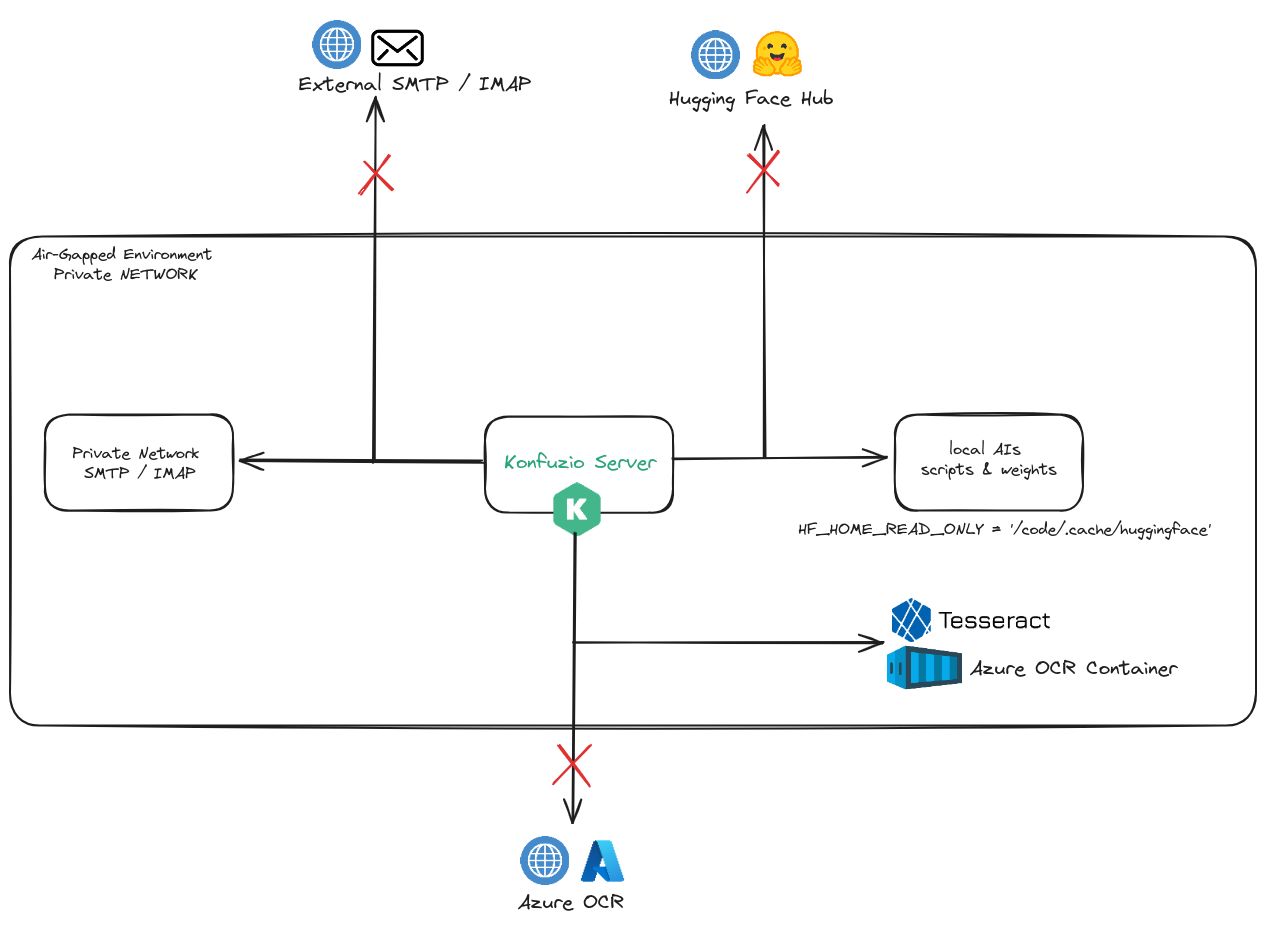

Air-gapped Environments and Upcoming Changes

For those operating the Konfuzio Server in air-gapped environments, your Konfuzio Docker images are licensed to operate for one year, based on the release date, without needing to connect to our billing server.

Soon, however, we will be introducing changes to our license check mechanism. The entire license check will be deactivated if a BILLING_API_KEY is not set. We are currently notifying self-hosted users about this upcoming change, urging them to configure their billing credentials. For more information, please refer to the Billing and License section in our documentation.

For users requiring further instructions, especially those operating in air-gapped environments, please contact us for assistance.

We appreciate your understanding and cooperation as we continue to enhance our security, licensing, and billing measures for all self-hosted installations of the Konfuzio Server.

Kubernetes

Required tools

Before deploying Konfuzio to your Kubernetes cluster, there are some tools you must have installed locally.

kubectl

kubectl is the tool that talks to the Kubernetes API. kubectl 1.15 or higher is required and it needs to be compatible with your cluster (+/-1 minor release from your cluster).

> Install kubectl locally by following the Kubernetes documentation.

The server version of kubectl cannot be obtained until we connect to a cluster. Proceed with setting up Helm.
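Once a cluster connection is available, the version rule stated above (kubectl 1.15 or higher, within one minor release of the cluster) can be checked mechanically. This is a small sketch under the assumption that both versions are given as strings such as v1.27.3; the function names are hypothetical.

```python
def parse_minor(version):
    """Return (major, minor) from a version string such as 'v1.27.3'."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def kubectl_is_compatible(client, server, minimum=(1, 15)):
    """Check the minimum client version and the +/-1 minor release rule."""
    c_major, c_minor = parse_minor(client)
    s_major, s_minor = parse_minor(server)
    if (c_major, c_minor) < minimum:
        return False
    # Same major version, and at most one minor release apart.
    return c_major == s_major and abs(c_minor - s_minor) <= 1
```

The two version strings can be read from the output of kubectl version after connecting to the cluster.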

Helm

Helm is the package manager for Kubernetes. Konfuzio is tested and supported with Helm v3.

Getting Helm

You can get Helm from the project’s releases page, or follow other options under the official documentation of installing Helm.

Connect to a local Minikube cluster

For test purposes you can use minikube as your local cluster. If kubectl cluster-info is not showing minikube as the current cluster, use kubectl config use-context minikube to set the active cluster. For clusters in production, please visit the Kubernetes Documentation.

Initializing Helm

If Helm v3 is being used, there is no longer an init subcommand, and Helm is ready to be used once it is installed. Otherwise, please upgrade Helm.

Next steps

Once kubectl and Helm are configured, you can continue with configuring your Kubernetes cluster.

Deployment

Before running helm install, you need to make some decisions about how you will run Konfuzio. Options can be specified using Helm’s --set option.name=value or --values=my_values.yaml command line option. A complete list of command line options can be found here. This guide will cover required values and common options.

Create a values.yaml file for your Konfuzio configuration. See Helm docs for information on how your values file will override the defaults. Useful default values can be found in the values.yaml in the chart repository.
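A values file and a series of --set flags carry the same information in two shapes. The sketch below illustrates the correspondence by flattening a nested mapping into --set arguments. The helper name to_set_flags is hypothetical, the option names are the ones used elsewhere in this guide, and values containing commas or dots in keys would need escaping that this sketch does not handle.

```python
def to_set_flags(values, prefix=""):
    """Flatten a nested dict into Helm `--set key.path=value` arguments."""
    flags = []
    for key, value in values.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            # Nested sections become dotted paths, e.g. ingress.enabled.
            flags += to_set_flags(value, path)
        else:
            if isinstance(value, bool):
                value = "true" if value else "false"
            flags += ["--set", f"{path}={value}"]
    return flags


values = {
    "ingress": {"enabled": True, "HOST_NAME": "konfuzio.example.com"},
    "postgresql": {"install": False},
}
print(" ".join(to_set_flags(values)))
```

For anything beyond a handful of options, a values file is usually easier to review and keep under version control than a long list of flags.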

Selecting configuration options

In each section collect the options that will be combined to use with helm install.

Secrets

There are some secrets that need to be created (e.g. SSH keys). By default they will be generated automatically.

Networking and DNS

By default, Konfuzio relies on Kubernetes Service objects of type LoadBalancer to expose Konfuzio services using name-based virtual servers configured with Ingress objects. You’ll need to specify a domain which will contain records to resolve the domain to the appropriate IP.

--set ingress.enabled=True

--set ingress.HOST_NAME=konfuzio.example.com

Persistence

By default, the setup will create Volume Claims with the expectation that a dynamic provisioner will create the underlying Persistent Volumes. If you would like to customize the storageClass or manually create and assign volumes, please review the storage documentation.

Important: After initial installation, making changes to your storage settings requires manually editing Kubernetes objects, so it’s best to plan ahead before installing your production instance of Konfuzio to avoid extra storage migration work.

TLS certificates

You should be running Konfuzio using https, which requires TLS certificates. To get automated certificates using Let’s Encrypt, you need to install cert-manager in your cluster. If you have your own wildcard certificate, already have cert-manager installed, or have some other way of obtaining TLS certificates, you can skip the cert-manager installation. For the default configuration, you must specify an email address to register your TLS certificates.

Include these options in your Helm install command:

--set letsencrypt.enabled=True

--set letsencrypt.email=me@example.com

PostgreSQL

By default, Konfuzio provides an in-cluster PostgreSQL database, for trial purposes only.

Note

Unless you are an expert in managing a PostgreSQL database within a cluster, we do not recommend this configuration for use in production.

A single, non-resilient Deployment is used.

You can read more about setting up your production-ready database in the PostgreSQL documentation. As soon as you have an external PostgreSQL database ready, Konfuzio can be configured to use it as shown below.

Include these options in your Helm install command:

--set postgresql.install=false

--set global.psql.host=production.postgress.hostname.local

--set global.psql.password.secret=kubernetes_secret_name

--set global.psql.password.key=key_that_contains_postgres_password

Redis

All the Redis configuration settings are configured automatically.

Persistent Volume

Konfuzio relies on object storage for highly-available persistent data in Kubernetes. By default, Konfuzio uses a persistent volume within the cluster.

CPU, GPU and RAM Resource Requirements

The resource requests and the number of replicas for the Konfuzio components in this setup are set by default to be adequate for a small production deployment. This is intended to fit in a cluster with at least 8 vCPU with AVX2 support enabled, 32 GB of RAM and one Nvidia GPU with a minimum of 4 GB of memory that supports at least CUDA 10.1 and cuDNN 7.0. If you are trying to deploy a non-production instance, you can reduce the defaults in order to fit into a smaller cluster. Konfuzio can work without a GPU. The GPU is used to train and run Categorization AIs. We observe 5x faster training and 2x faster execution on GPU compared to CPU.

Storage Requirements

This section outlines the initial storage requirements for the on-premises installation. It is important to take these requirements into consideration when setting up your server, as the amount of storage needed may depend on the number of documents being processed.

For testing purposes, a minimum of 10 GB is required per server (not per instance of a worker).

For serious use, a minimum of 100 GB should be directly available to the application. This amount should also cover the following:

Postgres, which typically uses 10% of this size.

Docker image storage, up to 25 GB should be reserved for upgrades.

Each page thumbnail adds 1-2 KB to the file size.

After uploading, the total file size of a page image and its thumbnails increases by approximately a factor of 3 (10 MB becomes approximately 30 MB on the server).

To reduce storage usage, it is recommended to disable sandwich file generation by setting ALWAYS_GENERATE_SANDWICH_PDF=False.
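The figures above can be combined into a rough sizing estimate. This sketch is one possible reading of them, not an official formula: it assumes uploaded files grow by a factor of 3 on the server, reserves 25 GB for Docker images, and treats Postgres as 10% of the total. The function name and the combination of the three figures are assumptions.

```python
def estimate_storage_gb(upload_gb, growth_factor=3.0,
                        docker_reserve_gb=25.0, postgres_share=0.10):
    """Rough storage estimate in GB based on the rules of thumb above."""
    # Page images and thumbnails: roughly 3x the uploaded size.
    files_gb = upload_gb * growth_factor
    # Postgres is assumed to take a fixed share of the total.
    total_gb = (files_gb + docker_reserve_gb) / (1.0 - postgres_share)
    return {
        "files": files_gb,
        "docker": docker_reserve_gb,
        "postgres": total_gb * postgres_share,
        "total": total_gb,
    }


estimate = estimate_storage_gb(20)
print(round(estimate["total"], 1))
```

Under these assumptions, about 20 GB of uploaded documents already comes close to the 100 GB recommended for serious use.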

Deploy using Helm

Once you have all of your configuration options collected, we can get any dependencies and run Helm. In this example, we’ve named our Helm release konfuzio.

helm repo add konfuzio-repo https://git.konfuzio.com/api/v4/projects/106/packages/helm/stable

helm repo update

helm upgrade --install konfuzio konfuzio-repo/konfuzio-chart --values my_values.yaml

Please create a my_values.yaml file for your Konfuzio configuration. Useful default values can be found in the values.yaml in the chart repository. See Helm docs for information on how your values file will override the defaults. Alternatively, you can specify your configuration using --set option.name=value.

Monitoring the Deployment

The status of the deployment can be checked by running helm status konfuzio, which can also be done while the deployment is taking place if you run the command in another terminal.

Autoscaling

The Konfuzio Server deployments can be scaled dynamically using a Horizontal Pod Autoscaler and a Cluster Autoscaler. The autoscaling configuration for the Konfuzio Server installation of https://app.konfuzio.com can be found in this Helm Chart.

Initial login

You can access the Konfuzio instance by visiting the domain specified during installation. In order to create an initial superuser, please connect to a running pod.

kubectl get pod

kubectl exec --stdin --tty my-konfuzio-* -- bash

python manage.py createsuperuser

Quick Start via Kubernetes and Helm

The following commands allow you to get a Konfuzio Server installation running with minimal configuration effort and relying on the default values of the Chart. This uses Postgres, Redis and S3 via MinIO as in-cluster deployments. This setup is not suited for production and may use insecure defaults.

helm repo add konfuzio-repo https://git.konfuzio.com/api/v4/projects/106/packages/helm/stable

helm repo update

helm install my-konfuzio konfuzio-repo/konfuzio-chart \
--set envs.HOST_NAME="host-name-for-your-installation.com" \
--set envs.BILLING_API_KEY="******" \
--set image.tag="released-******" \
--set image.imageCredentials.username="******" \
--set image.imageCredentials.password="******"

Upgrade

Before upgrading your Konfuzio installation, you need to check the changelog corresponding to the specific release you want to upgrade to and look for any release notes that might pertain to the new version.

We also recommend that you take a backup first.

Upgrade Konfuzio following our standard procedure, with the following additions:

1. Check the change log for the specific version you would like to upgrade to.

2. Ensure that you have created a database backup in the previous step. Without a backup, Konfuzio data might be lost if the upgrade fails.

3a. If you use a values.yaml, update image.tag="released-******" to the desired Konfuzio Server version.

helm upgrade --install my-konfuzio konfuzio-repo/konfuzio-chart -f values.yaml

3b. If you use “--set”, you can directly set the desired Konfuzio Server version.

helm upgrade --install my-konfuzio konfuzio-repo/konfuzio-chart --reuse-values --set image.tag="released-******"

The database migrations for PostgreSQL are performed automatically.

Docker - Single VM setup

Konfuzio can be configured to run on a single virtual machine, without relying on Kubernetes. In this scenario, all necessary containers are started manually or with a container orchestration tool of your choice.

VM Requirements

We recommend a virtual machine with a minimum of 8 vCPU (incl. AVX2 support), 32 GB of RAM and an installed Docker runtime. An Nvidia GPU is recommended but not required. In this setup Konfuzio is running in the context of the Docker executor, therefore there are no strict requirements for the VM’s operating system. However, we recommend a Linux VM with Debian, Ubuntu, CentOS, or Red Hat Linux.

Login to the Konfuzio Docker Image Registry

We will provide you with the credentials to log in to our Docker Image Registry, which allows you to access and download our Docker Images. This action requires an internet connection.

The internet connection can be turned off once the download is complete. In case there is no internet connection available during setup, the container must be transferred with an alternative method as a file to the virtual machine.

Registry URL: {PROVIDED_BY_KONFUZIO}

Username: {PROVIDED_BY_KONFUZIO}

Password: {PROVIDED_BY_KONFUZIO}

docker login REGISTRY_URL

The Tag “latest” should be replaced with an actual version. A list of available tags can be found here: https://dev.konfuzio.com/web/changelog_app.html.

Quick Start via Docker-Compose

The fastest method for deploying Konfuzio Server using Docker is through Docker-Compose. To get started, follow these steps:

Install Docker-Compose in a version that is compatible with Compose file format 3.9.

Run docker compose version to verify that Docker-Compose is available.

Download the docker-compose.yml file.

Fill in the mandatory variables in the first section of the docker-compose file.

Launch Konfuzio Server by running docker compose up -d.

If you prefer to use Docker exclusively, we provide detailed instructions for setting up the containers in each necessary step (Step 1-9).

1. Download Docker Image

After you have connected to the Registry, the Konfuzio docker image can be downloaded via “docker pull”.

docker pull REGISTRY_URL/konfuzio/text-annotation/master:latest

The Tag “latest” should be replaced with an actual version. A list of available tags can be found here: https://dev.konfuzio.com/web/changelog_app.html.

2. Setup PostgreSQL, Redis, BlobStorage/FileSystemStorage

The databases and credentials to access them are created in this step. Please choose your selected databases at this point. You need to be able to connect to your PostgreSQL via psql and to your Redis server via redis-cli before continuing the installation.

In case you use FileSystemStorage and Docker volume mounts, you need to make sure the volume can be accessed by the konfuzio docker user (uid=999). You might want to run chown 999:999 -R /konfuzio-vm/text-annotation/data on the host VM.

The PostgreSQL database connection can be verified via psql and a connection string in the following format.

This connection string is later set as DATABASE_URL.

psql postgres://USER:PASSWORD@HOST:PORT/NAME

The Redis connection can be verified using redis-cli and a connection string.

To use the connection string for BROKER_URL, RESULT_BACKEND and DEFENDER_REDIS_URL you need to append the database selector (e.g. redis://default:PASSWORD@HOST:PORT/0)

redis-cli -u redis://default:PASSWORD@HOST:PORT
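The two connection string formats above can be generated from their parts, which helps when passwords contain characters such as @ or / that must be percent-encoded inside a URL. This is a minimal sketch; the function names and the sample credentials are hypothetical.

```python
from urllib.parse import quote


def postgres_url(user, password, host, port, name):
    """DATABASE_URL in the form postgres://USER:PASSWORD@HOST:PORT/NAME."""
    return (f"postgres://{quote(user, safe='')}:{quote(password, safe='')}"
            f"@{host}:{port}/{name}")


def redis_url(password, host, port, database=None, user="default"):
    """Redis URL; pass `database` to append the database selector."""
    url = f"redis://{user}:{quote(password, safe='')}@{host}:{port}"
    return url if database is None else f"{url}/{database}"


# Without a selector for redis-cli, with selector 0 for BROKER_URL and similar.
print(redis_url("secret", "10.0.0.1", 6379))
print(redis_url("secret", "10.0.0.1", 6379, database=0))
```

The variant without a database selector matches the redis-cli check, while the variant ending in /0 is the form described for BROKER_URL, RESULT_BACKEND and DEFENDER_REDIS_URL.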

3. Setup the environment variable file

Copy the /code/.env.example file from the container and adapt it to your settings. The .env file can be saved anywhere on the host VM. In this example we use “/konfuzio-vm/text-annotation.env”.
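Before starting any container, it can be useful to check that the adapted .env file actually defines the variables mentioned in this guide. The sketch below assumes a simple KEY=value file format. The list of required keys is an assumption assembled from the variables named in this document; the .env.example file in the container remains the authoritative reference.

```python
# Assumed list, collected from the variables mentioned in this guide.
REQUIRED = ("DATABASE_URL", "BROKER_URL", "RESULT_BACKEND",
            "DEFENDER_REDIS_URL", "BILLING_API_KEY")


def parse_env(text):
    """Parse KEY=value lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        values[key.strip()] = value.strip()
    return values


def missing_keys(text, required=REQUIRED):
    """Return the required keys that are absent or empty."""
    values = parse_env(text)
    return [key for key in required if not values.get(key)]
```

For example, missing_keys(open("/konfuzio-vm/text-annotation.env").read()) would list the variables that still need a value.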

4. Init the database, create first superuser via cli and prefill e-mail templates

In this example we store the files on the host VM and mount the directory “/konfuzio-vm/text-annotation/data” into the container. In the first step we create a container with a shell and then start the initialization scripts within the container. The container needs to be able to access the IP addresses and hostnames used in the .env file. This can be ensured using --add-host. In the example we make the host IP 10.0.0.1 available under the name “host”.

docker run -it --add-host=host:10.0.0.1 \

--env-file /konfuzio-vm/text-annotation.env \

--mount type=bind,source=/konfuzio-vm/text-annotation/data,target=/data \

REGISTRY_URL/konfuzio/text-annotation/master:latest bash

python manage.py migrate

python manage.py createsuperuser

python manage.py init_email_templates

python manage.py init_user_permissions

After completing these steps you can exit and remove the container.

Note

The username used during the createsuperuser dialog must have the format of a valid e-mail in order to be able to login later.

Note

The default email templates can be customized by navigating to the section “Email Templates” in your on-prem installation. [Section 9a](/web/on_premises.html#a-upgrade-to-newer-konfuzio-version) explains how these templates can be updated.

5. Start the container

In this example we start four containers. The first one serves the Konfuzio web application.

docker run -p 80:8000 --name web -d --add-host=host:10.0.0.1 \

--env-file /konfuzio-vm/text-annotation.env \

--mount type=bind,source=/konfuzio-vm/text-annotation/data,target=/data \

REGISTRY_URL/konfuzio/text-annotation/master:latest

The second and third are used to process tasks in the background without blocking the web application. Depending on your load scenario, you might want to start a larger number of worker containers.

docker run --name worker1 -d --add-host=host:10.0.0.1 \
--env-file /konfuzio-vm/text-annotation.env \
--mount type=bind,source=/konfuzio-vm/text-annotation/data,target=/data \
REGISTRY_URL/konfuzio/text-annotation/master:latest \
celery -A app worker -l INFO --concurrency 1 -Q celery,priority_ocr,ocr,\
priority_extract,extract,processing,priority_local_ocr,local_ocr,\
training,finalize,training_heavy,categorize

docker run --name worker2 -d --add-host=host:10.0.0.1 \
--env-file /konfuzio-vm/text-annotation.env \
--mount type=bind,source=/konfuzio-vm/text-annotation/data,target=/data \
REGISTRY_URL/konfuzio/text-annotation/master:latest \
celery -A app worker -l INFO --concurrency 1 -Q celery,priority_ocr,ocr,\
priority_extract,extract,processing,priority_local_ocr,local_ocr,\
training,finalize,training_heavy,categorize

The fourth container is a Beats-Worker that takes care of scheduled tasks (e.g. auto-deleted documents).

docker run --name beats -d --add-host=host:10.0.0.1 \
--env-file /konfuzio-vm/text-annotation.env \
--mount type=bind,source=/konfuzio-vm/text-annotation/data,target=/data \
REGISTRY_URL/konfuzio/text-annotation/master:latest \
celery -A app beat -l INFO -s /tmp/celerybeat-schedule

[Optional] 6. Use Flower to monitor tasks

Flower can be used as a task monitoring tool. Flower is only accessible to Konfuzio superusers and is part of the Konfuzio Server Docker Image.

docker run --name flower -d --add-host=host:10.0.0.1 \
--env-file /konfuzio-vm/text-annotation.env \
--mount type=bind,source=/konfuzio-vm/text-annotation/data,target=/data \
REGISTRY_URL/konfuzio/text-annotation/master:latest \
celery -A app flower --url_prefix=flower --address=0.0.0.0 --port=5555

The Konfuzio Server application functions as a reverse proxy and serves the Flower application. In order for Django to correctly access the Flower application, it requires knowledge of the Flower URL. Specifically, the FLOWER_URL should be set to http://host:5555/flower.

FLOWER_URL=http://host:5555/flower

Please ensure that the Flower container is not exposed externally, as it does not handle authentication and authorization itself.

[Optional] 7. Run Container for Email Integration

The ability to upload documents via email can be achieved by starting a dedicated container with the respective environment variables.

SCAN_EMAIL_HOST=imap.example.com

SCAN_EMAIL_HOST_USER=user@example.com

SCAN_EMAIL_RECIPIENT=automation@example.com

SCAN_EMAIL_HOST_PASSWORD=xxxxxxxxxxxxxxxxxx

docker run --name scan_email -d --add-host=host:10.0.0.1 \
--env-file /konfuzio-vm/text-annotation.env \
--mount type=bind,source=/konfuzio-vm/text-annotation/data,target=/data \
REGISTRY_URL/konfuzio/text-annotation/master:latest \
python manage.py scan_email

[Optional] 8. Use Azure Read API (On-Premises or as Service)

The Konfuzio Server can work together with the Azure Read API. There are two options to use the Azure Read API in an on-premises setup.

Use the Azure Read API as a service from the public Azure cloud.

Install the Azure Read API container directly on your on-premises infrastructure via Docker.

The Azure Read API is in both cases connected to the Konfuzio Server via the following environment variables.

AZURE_OCR_KEY=123456789 # The Azure OCR API key

AZURE_OCR_BASE_URL=http://host:5000 # The URL of the READ API

AZURE_OCR_VERSION=v3.2 # The version of the READ API

For the first option, log in to the Azure Portal and create a Computer Vision resource under the Cognitive Services section. After the resource is created, the AZURE_OCR_KEY and AZURE_OCR_BASE_URL are displayed. These need to be added as environment variables.

For the “on-premises” option, please refer to the Azure Read API Container installation guide. Please create a Computer Vision service to get an API_KEY and ENDPOINT_URI which are compatible with running the On-Premises container. The ENDPOINT_URI must be reachable by the Azure Read API Container. After the resource is created, store ENDPOINT_URI and API_KEY as environment variables and follow the steps in the installation guide.

How to fix common Azure Read API issues for On-Premises

1. Ensure AVX2 Compatibility:

Verify if your system supports Advanced Vector Extensions 2 (AVX2). You can do this by running the following command on Linux hosts:

grep -q avx2 /proc/cpuinfo && echo AVX2 supported || echo No AVX2 support detected

For further information, refer to Microsoft’s AVX2 support guide.
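The same check can be scripted, for example as part of a pre-installation test. This sketch takes the contents of /proc/cpuinfo as a string and looks for the avx2 flag; the function name has_avx2 is hypothetical, and the approach only applies to Linux hosts.

```python
def has_avx2(cpuinfo_text):
    """Return True if any 'flags' line of /proc/cpuinfo lists avx2."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # The flags follow the colon as a space-separated list.
            _, _, flags = line.partition(":")
            if "avx2" in flags.split():
                return True
    return False
```

On a Linux host it can be called as has_avx2(open("/proc/cpuinfo").read()).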

2. Verify Azure Read Container Status:

You can monitor the Azure Read Container’s status through its web interface. To do this, access the container on Port 5000 (e.g. http://localhost:5000/status) from a web browser connected to the Container’s network. You might need to replace ‘localhost’ with the IP/network name of the Azure container. This interface will also help detect issues such as invalid credentials or an inaccessible license server (possibly due to a firewall). Refer to Microsoft’s guide on validating container status for further assistance.

3. Consider Trying a Different Container Tag:

If your Azure Read API Container ceases to function after a restart, it may be due to an automatic upgrade to a new, potentially faulty Docker tag. In such cases, consider switching to a previous Docker tag. You can view the complete version history of Docker tags at the Microsoft Artifact Registry. Remember to uncheck the “Supported Tags Only” box to access all available versions.

9a. Upgrade to newer Konfuzio Version

To update Konfuzio to the latest released version, check the timestamped name of the latest release here: https://dev.konfuzio.com/web/changelog_app.html

SSH into the server that runs the Konfuzio Docker container

Open the docker-compose.yml file for editing, and search for the line:

image: git.konfuzio.com:5050/konfuzio/text-annotation/master:released-<timestamp>

Replace released-<timestamp> with the latest release you want to update to. For example, if the latest release happened on November 15, you will have something like released-2023-11-15_09-39-24, so you will change the image path to:

image: git.konfuzio.com:5050/konfuzio/text-annotation/master:released-2023-11-15_09-39-24

Run the following to have Docker pull the new image and rebuild the container:

docker compose up

Once the command has completed successfully, the server update is complete.
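If the upgrade is repeated regularly, the edit to the image line can be automated. The following sketch replaces every released-<timestamp> tag of the text-annotation image in the text of a compose file. The function name is hypothetical, the older tag in the example is made up for illustration, and the regular expression assumes the timestamp format shown above.

```python
import re

# Matches the image path suffix followed by a released-<timestamp> tag.
IMAGE_TAG = re.compile(r"(text-annotation/master:)released-[0-9_-]+")


def set_release_tag(compose_text, new_tag):
    """Replace every released-<timestamp> image tag with `new_tag`."""
    return IMAGE_TAG.sub(lambda m: m.group(1) + new_tag, compose_text)


# The old tag below is a made-up example value.
old = "    image: git.konfuzio.com:5050/konfuzio/text-annotation/master:released-2023-10-01_08-00-00\n"
print(set_release_tag(old, "released-2023-11-15_09-39-24"))
```

Reading docker-compose.yml, passing its contents through set_release_tag and writing the result back has the same effect as the manual edit, after which docker compose up is run as described.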

Updating existing email templates

During Konfuzio upgrades, it is possible that we release updates to the installed email templates. It is important to note that these updates do not override any existing email templates. This ensures that you can make your own changes without the risk of losing them during an upgrade. If you do want to update and sync your email templates with each Konfuzio update, you can delete all current email templates, or a single email template which needs updating, and then run python manage.py init_email_templates (see step 4). This action will then reset each of those templates to its default value.

9b. Downgrade to older Konfuzio Version

Konfuzio downgrades are performed by creating a fresh Konfuzio installation into which existing Projects can be imported. The following steps need to be undertaken:

Export the Projects that you want to have available after the downgrade using Project.export_project_data() from konfuzio_sdk. Please make sure you use an SDK version that is compatible with the Konfuzio Server version you want to migrate to.

Create a new Postgres Database and a new Folder/Bucket for file storage which will be used for the downgraded version.

Install the desired Konfuzio Server version, starting with step 1.

Import the projects using python manage.py project_import.

Load Scenario for Single VM with 32GB

Scenario 1: With self-hosted OCR

| Number of Containers | Container Type | RAM | Capacity |
|---|---|---|---|
| 1 | Web Container | 4GB | … |
| 3 | Generic Celery Worker | 4GB | 1500 (3 x 500) Pages of Extraction, Categorization, or Splitting per hour |
| 1 | Self-Hosted OCR worker | 8GB | 1200 (1 x 1200) Pages per hour (not needed if an external API Service is used) |
| N | remaining Containers | 4GB | … |

With this setup, around 1200 Pages per hour can be processed using OCR and Extraction, around 750 Pages per hour can be processed if OCR, Categorization and Extraction are active, around 500 Pages per hour can be processed if OCR, Splitting, Categorization and Extraction are active.

Scenario 2: Without self-hosted OCR

| Number of Containers | Container Type | RAM | Capacity |
|---|---|---|---|
| 1 | Web Container | 4GB | … |
| 5 | Generic Celery Worker | 4GB | 2500 (5 x 500) Pages of Extraction, Categorization, or Splitting per hour |
| N | remaining Containers | 4GB | … |

With this setup, around 2500 Pages per hour can be processed using OCR and Extraction, around 1250 Pages per hour can be processed if OCR, Categorization and Extraction are active, around 800 Pages per hour can be processed if OCR, Splitting, Categorization and Extraction are active.

Note

If you train large AI Models (>100 Training Pages), Generic Celery Workers need more than 4GB of RAM. The Benchmark used an Extraction AI with “word” detection mode and 10 Labels in 1 Label Set. The capacity is shared between all Users using the Konfuzio Server setup.

The level of parallelism of task processing, and therefore throughput, can be increased by running more “Generic Celery Workers”.
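The throughput figures in both scenarios are consistent with a simple model: each Generic Celery Worker handles about 500 pages of one processing step per hour, the worker capacity is divided by the number of active steps (Extraction, Categorization, Splitting), and a self-hosted OCR worker caps the result at its own rate. The sketch below encodes this reading. It is an interpretation of the numbers above rather than an official sizing formula, and the function name is hypothetical.

```python
def pages_per_hour(workers, stages, ocr_capacity=None, pages_per_worker=500):
    """Estimate hourly page throughput.

    workers: number of Generic Celery Workers
    stages: worker-side steps per page (Extraction, Categorization, Splitting)
    ocr_capacity: pages/hour of a self-hosted OCR worker, or None if external
    """
    # Worker capacity is shared evenly between the active steps.
    worker_rate = workers * pages_per_worker // stages
    if ocr_capacity is None:
        return worker_rate
    # The slower of OCR and worker processing limits the pipeline.
    return min(worker_rate, ocr_capacity)


# Scenario 1: three workers plus one self-hosted OCR worker.
print([pages_per_hour(3, s, ocr_capacity=1200) for s in (1, 2, 3)])
# Scenario 2: five workers, OCR provided externally.
print([pages_per_hour(5, s) for s in (1, 2, 3)])
```

Real throughput also depends on document size, model complexity and the other factors described below, so the result should be treated as a starting point for load tests.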

The performance of any system that processes documents can be affected by various factors, including the number of simultaneous connections, the size of the documents being processed, and the available resources such as RAM and processing power.

The number of simultaneous connections can also impact performance. If multiple users are uploading and processing large documents simultaneously, it can put a strain on the system’s resources, leading to slower processing times.

Other factors that can affect performance include network latency, disk speed, and the complexity of the processing algorithms. It’s essential to consider all these factors when designing and deploying document processing systems to ensure optimal performance.

Docker-Compose vs. Kubernetes

When it comes to running the Konfuzio Server, the choice between Docker Compose and Kubernetes will depend on your specific requirements and use case.

Docker Compose can be a good choice if you are running Konfuzio Server on a single host in production or for testing and development purposes. With Docker Compose, you can define and customize the services required for the Konfuzio Server in a YAML file we provide, and then use a single command to start all the containers.

On the other hand, Kubernetes is more suitable for production environments where you need to run Konfuzio Server at scale, across multiple hosts, and with features such as auto-scaling and self-healing. Kubernetes has a steep learning curve, but it provides advanced features for managing and scaling containerized applications.

Regarding the use of Docker Compose in multiple VMs, while it’s possible to use Docker Compose in a distributed environment, managing multiple VMs can be more work than using a dedicated orchestration platform like Kubernetes. Kubernetes provides built-in support for managing distributed systems and is designed to handle the complexities of running containers at scale.

In either case, you can use the same Docker image for the Konfuzio Server, which will ensure consistency and portability across different environments. Overall, the choice between Docker Compose and Kubernetes will depend on your specific needs, level of expertise, and infrastructure requirements.

Custom AI model training via CI pipelines

Konfuzio is a platform that provides users with the ability to run custom AI workflows securely through its Continuous Integration (CI) pipelines. These pipelines enable users to run their code automatically and continuously, learning from human feedback on any errors or issues as soon as they occur.

In situations where the Kubernetes deployment option is not utilized, Konfuzio recommends using a dedicated virtual machine to run these pipelines. This ensures that the pipelines are isolated from other processes and are therefore less susceptible to interference or interruption.

To effectively run these CI pipelines, the selected CI application must support Docker and webhooks. Docker allows for the creation and management of containers, which are used to package and distribute the code to be tested. Webhooks, on the other hand, provide a way for the CI application to trigger the pipeline automatically.

Furthermore, the CI application needs to have network access to the Konfuzio installation. This is necessary to enable the CI application to interact with the Konfuzio platform, ensuring that the CI pipelines are able to access the necessary resources and dependencies.

Overall, Konfuzio’s use of CI pipelines provides a powerful tool for advanced users to run their AI workflows securely, with the added benefit of automated testing and continuous feedback.

SSO via OpenID Connect (OIDC)

Konfuzio utilizes OpenID Connect (OIDC) for identity verification, implementing this through Mozilla’s Django OIDC. OIDC is a well-established layer built atop the OAuth 2.0 protocol, enabling client applications to confirm the identity of end users. Numerous Identity Providers (IdP) exist that support the OIDC protocol, thus enabling the implementation of Single Sign-On (SSO) within your application. A commonly chosen provider is Keycloak. In the subsequent section, you will find a tutorial on how to use Keycloak with OIDC.

SSO Keycloak Integration

With Keycloak, you can implement the following workflows in Konfuzio:

Use the Keycloak server to authenticate users with Konfuzio, automatically creating users in Konfuzio if they don’t exist yet.

Disable password login in Konfuzio, forcing users to use Keycloak to log in.

Synchronize groups assigned to users in Keycloak with groups in Konfuzio.

Use Keycloak to generate tokens that can be used to access the Konfuzio REST API.

Enabling the first workflow (authenticating Keycloak users with Konfuzio) is mandatory to support the other ones.

Set up

To start and set up Keycloak server:

Download the Keycloak server.

Install and start Keycloak server using instructions.

Open the Keycloak dashboard in browser (locally it’s http://0.0.0.0:8080/).

Create the admin user.

Login to the Administration Console.

Create a new Realm or use the existing one (Master).

At this point you need to create a new Keycloak client to integrate it with Konfuzio.

Create a new Keycloak client of type OpenID Connect. Give it any name.

When prompted for client authentication (or access type in older versions), choose On (or confidential).

Make sure that the direct access grants option is enabled.

Make sure that the web origins URL list includes the URL where requests to Keycloak are coming from (i.e. your Konfuzio installation URL).

Move to the Credentials tab and save the Secret value. Store it somewhere as it will be needed later.

You can now create your users and groups from the relevant sections in the Keycloak dashboard.

Use the Keycloak server to authenticate users with Konfuzio

After the setup, you need to set the following environment variables for your Konfuzio Server installation:

KEYCLOAK_URL (http://127.0.0.1:8080/ for localhost)

OIDC_RP_SIGN_ALGO (RS256 by default)

OIDC_RP_CLIENT_ID (client name from the setup)

OIDC_RP_CLIENT_SECRET (secret value from the setup)

SSO_ENABLED (set to True to activate the integration)

After a restart, you should see an SSO button on the login page. Clicking it will redirect you to the Keycloak login page. After a successful login, you will be redirected back to Konfuzio and logged in.

Disable password login in Konfuzio

Set the PASSWORD_LOGIN_ENABLED environment variable to False on your Konfuzio installation. This will remove the password login form from the login page, forcing users to use Keycloak to log in. In addition, this deactivates the password-based user signup form.

Synchronize groups assigned to users in Keycloak with groups in Konfuzio

Konfuzio can synchronize groups with Keycloak. This means that if you create a group in Keycloak and assign users to it, those users will be automatically added to an already existing group with the same name in Konfuzio. This is done by creating a group mapper in Keycloak:

Select the client you created earlier.

Go to the Client scopes tab inside the client.

You should see a scope which is the name of the client followed by -dedicated. Click on it.

Click Configure a new mapper.

Choose Group Membership.

In the settings for the mapper:

Set the Name to groups (lowercase).

Set the Token Claim Name to groups (same as above).

Disable Full group path.

Save.

You also need to set the SYNCHRONIZE_KEYCLOAK_GROUPS_WITH_KONFUZIO_GROUPS environment variable to True on your Konfuzio installation. For additional security, you can also set OIDC_RENEW_ID_TOKEN_EXPIRY_SECONDS (default: 15 minutes) to a lower value, so permissions are checked from the backend in shorter intervals (see documentation).

Now when assigning users to groups in Keycloak, the same groups will be created in Konfuzio (if they don’t exist already) and the users will be added to them. Note that you still need to add permissions to the groups in Konfuzio for them to have any effect. You can automate this process with a CLI command, run python manage.py import_groups_and_permissions --help for more information.

An exception to this is a group named with the value of the environment variable KEYCLOAK_SUPERUSER_GROUP_NAME (default: Superuser) on Keycloak. This group will map to the superuser status on Konfuzio, which gives access to everything on the instance. If you don’t create a group named with the value of KEYCLOAK_SUPERUSER_GROUP_NAME on Keycloak, this will not be used.
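The mapping described above can be pictured with a small sketch. This is only an illustration of the documented behavior, not Konfuzio's actual implementation: the function name `split_keycloak_groups` is hypothetical, and it assumes the `groups` claim arrives as a plain list of group names (which is what disabling Full group path should produce).

```python
from typing import Iterable, List, Tuple


def split_keycloak_groups(
    claim_groups: Iterable[str],
    superuser_group_name: str = "Superuser",  # mirrors KEYCLOAK_SUPERUSER_GROUP_NAME
) -> Tuple[bool, List[str]]:
    """Split the groups claim into superuser status and regular group names.

    Hypothetical helper: it only mirrors the rule described in the text.
    """
    names = set(claim_groups)
    # The specially named group maps to superuser status instead of a group.
    is_superuser = superuser_group_name in names
    # Every other name corresponds to a Konfuzio group of the same name.
    regular_groups = sorted(names - {superuser_group_name})
    return is_superuser, regular_groups
```

For example, a user whose claim contains `Reviewers` and `Superuser` would be treated as a superuser and as a member of the `Reviewers` group.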

Use Keycloak to generate tokens for the Konfuzio REST API

By default, the Konfuzio REST API requires token authentication, which is only possible with regular username and password. However, your Keycloak server can be configured to directly generate tokens that are compatible with the REST API.

Set up the Keycloak client

You can reuse the client you’ve already created in the original client setup. However, by choosing the default client authentication: on option, users will also need to include the secret you’ve created to authenticate with the Keycloak server. This is not ideal for the REST API, so you probably want to create a new client with the client authentication: off/public option. If you’re using groups synchronization, remember to add the groups mapper to the new client as well.

Get a token from Keycloak

Once you have your client setup, you can test the token generation. Create a request similar to this:

curl --request POST \
  --url https://sso.konfuzio.com/realms/myrealm/protocol/openid-connect/token \
  --header 'Content-Type: application/x-www-form-urlencoded' \
  --data username=myaccount@konfuzio.com \
  --data password=mypassword \
  --data client_id=myclient \
  --data client_secret=mysecret \
  --data grant_type=password \
  --data scope=openid

Change the variables accordingly:

sso.konfuzio.com should be replaced with your Keycloak installation URL.

myrealm should be replaced with the name of your Keycloak realm.

The username field should contain the username of the Keycloak user.

The password field should contain the password of the Keycloak user.

The client_id field should contain the name of the client you created earlier.

The client_secret field is only necessary if you configured your client to be “confidential”. If so, it should contain the client secret as shown in the Credentials tab of the Keycloak client.

The request will return an access_token token field that you can use in the next step. Depending on your client setup, it will also include a refresh_token and various fields that determine how long the generated token(s) are valid (see the Keycloak documentation for more information).
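If you prefer to script the token request, the same call can be sketched in Python with only the standard library. The function names `keycloak_token_url` and `build_token_request` are hypothetical helpers introduced for illustration. The URL layout and form fields are taken from the curl example above, and the sketch assumes `keycloak_url` is written the way `KEYCLOAK_URL` is (including `/auth/` on older Keycloak versions).

```python
from typing import Optional
from urllib.parse import urlencode
from urllib.request import Request


def keycloak_token_url(keycloak_url: str, realm: str) -> str:
    """Build the token endpoint URL for a realm (layout as in the curl example)."""
    base = keycloak_url if keycloak_url.endswith("/") else keycloak_url + "/"
    return f"{base}realms/{realm}/protocol/openid-connect/token"


def build_token_request(
    keycloak_url: str,
    realm: str,
    username: str,
    password: str,
    client_id: str,
    client_secret: Optional[str] = None,
) -> Request:
    """Prepare (but do not send) the password-grant token request."""
    form = {
        "username": username,
        "password": password,
        "client_id": client_id,
        "grant_type": "password",
        "scope": "openid",
    }
    # Only confidential clients need to send the secret.
    if client_secret:
        form["client_secret"] = client_secret
    return Request(
        keycloak_token_url(keycloak_url, realm),
        data=urlencode(form).encode("ascii"),
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
```

Passing the prepared request to `urllib.request.urlopen` and decoding the JSON response should give you the `access_token` field described above.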

Use the Keycloak token to access the REST API

With the token generated in the previous step, we can test a request to the Konfuzio API:

curl --request GET \
  --url https://app.konfuzio.com/api/v3/auth/me/ \
  --header 'Authorization: Bearer mytoken'

Change the variables accordingly:

app.konfuzio.com should be replaced with your Konfuzio installation URL.

mytoken should be replaced with the access_token token field returned by Keycloak.

If everything is configured correctly, the request should return information about the user whose credentials were used to generate the token:

{
  "username": "myaccount@konfuzio.com"
}

You can use the token to access any other endpoint of the Konfuzio REST API with the same user permissions as the user whose credentials were used to generate the token.
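As a minimal sketch, the authenticated request can also be prepared in Python. The helper name `build_api_request` is hypothetical. The endpoint path and the `Bearer` header format are taken from the curl example above.

```python
from urllib.request import Request


def build_api_request(
    konfuzio_url: str,
    access_token: str,
    path: str = "/api/v3/auth/me/",
) -> Request:
    """Prepare (but do not send) a GET request authenticated with a Keycloak token."""
    # Avoid a double slash when the installation URL ends with "/".
    url = konfuzio_url.rstrip("/") + path
    return Request(
        url,
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )
```

The same helper can target other endpoints by changing `path`. Send the request with `urllib.request.urlopen` once the token is valid.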

SSO via other Identity Management software

In order to connect your Identity Management software with Konfuzio using Single Sign-On (SSO), you’ll need to follow these steps. Please note, this tutorial is meant for Identity Management software that supports OIDC (OpenID Connect), a widely adopted standard for user authentication that’s compatible with many software platforms. If you’re using Keycloak, please refer to the separate tutorial on connecting Konfuzio with Keycloak.

Step 1: Setting up your Identity Provider

This step varies depending on the Identity Management software you’re using. You should refer to your Identity Management software’s documentation for setting up OIDC (OpenID Connect). During the setup, you will create a new OIDC client for Konfuzio. You will typically need to provide a client name, redirect URI, and optionally a logo.

The redirect URI should be the URL of your Konfuzio instance followed by “/oidc/callback/”, for example https://

At the end of this step, you should have the following information:

Client ID

Client Secret

OIDC Issuer URL

Step 2: Configuring mozilla-django-oidc

You’ll need to set the following environment variables:

OIDC_RP_CLIENT_ID: This should be the client ID from your Identity Management software.

OIDC_RP_CLIENT_SECRET: This should be the client secret from your Identity Management software.

OIDC_OP_AUTHORIZATION_ENDPOINT: This should be your OIDC issuer URL followed by “/authorize”.

OIDC_OP_TOKEN_ENDPOINT: This should be your OIDC issuer URL followed by “/token”.

OIDC_OP_USER_ENDPOINT: This should be your OIDC issuer URL followed by “/userinfo”.

OIDC_OP_JWKS_ENDPOINT: This should be your OIDC issuer URL followed by “/.well-known/jwks.json”.

OIDC_RP_SIGN_ALGO: This is the signing algorithm your Identity Management software uses. This is typically “RS256”.

OIDC_RP_SCOPES: These are the scopes to request. For basic authentication, this can just be “openid email”.
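The variables above can be derived from the issuer URL with a short sketch like the following. The function name `oidc_env_from_issuer` is hypothetical, and the endpoint suffixes simply follow the convention stated in this guide. Many providers use different paths, so check the values your provider actually publishes (usually in its discovery document at `/.well-known/openid-configuration`) before relying on them.

```python
from typing import Dict


def oidc_env_from_issuer(
    issuer_url: str,
    client_id: str,
    client_secret: str,
    sign_algo: str = "RS256",
    scopes: str = "openid email",
) -> Dict[str, str]:
    """Return the environment variables from Step 2 as a dictionary.

    Assumption: the provider uses the endpoint suffixes named in this guide.
    """
    issuer = issuer_url.rstrip("/")
    return {
        "OIDC_RP_CLIENT_ID": client_id,
        "OIDC_RP_CLIENT_SECRET": client_secret,
        "OIDC_OP_AUTHORIZATION_ENDPOINT": issuer + "/authorize",
        "OIDC_OP_TOKEN_ENDPOINT": issuer + "/token",
        "OIDC_OP_USER_ENDPOINT": issuer + "/userinfo",
        "OIDC_OP_JWKS_ENDPOINT": issuer + "/.well-known/jwks.json",
        "OIDC_RP_SIGN_ALGO": sign_algo,
        "OIDC_RP_SCOPES": scopes,
    }
```

Printing the items as `NAME=value` lines gives a starting point for an env file that you can then adjust to your provider.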

Step 3: Restarting Konfuzio

After you have set these environment variables, you should restart your Konfuzio server so that the new settings can take effect. How you do this depends on how you have deployed Konfuzio, but it might involve restarting a Docker container or a Django server.

Step 4: Testing

Now you can go to your Konfuzio instance and you should be redirected to your Identity Management software login screen.

Migrate AIs and Projects

Overview of Migration Methods

This table shows the two migration methods, “Project Export” and “AI File”, for moving elements between Projects and Konfuzio Server environments. It lists elements such as Annotations, Categories, Label Sets, and Labels, and indicates which method applies to each element. The “Project Export” method exports whole Projects, while the “AI File” method exports only the elements needed to run the AI on a different Konfuzio Server environment.

Evaluation |

|||

|---|---|---|---|

Yes |

No |

No |

|

Yes |

No |

No |

|

Yes |

Yes |

No |

|

Yes |

Yes |

No |

|

Yes |

Yes |

No |

|

No |

No |

No |

|

Yes |

No |

No |

|

Yes |

No |

No |

|

Yes |

No |

No |

|

No |

No |

No |

Migrate an Extraction or Categorization AI

Superusers can migrate Extraction and Categorization AIs via the web interface. This is explained on https://help.konfuzio.com.

Migrate a Project

Export the Project data from the source Konfuzio Server system.

pip install konfuzio_sdk
# The "init" command will ask you to enter a username, password and hostname to connect to Konfuzio Server.
# Please enter the hostname without a trailing slash. For example "https://app.konfuzio.com" instead of "https://app.konfuzio.com/".
# The init command creates a .env file in the current directory. To reset the SDK connection, you can delete this file.
konfuzio_sdk init
konfuzio_sdk export_project <PROJECT_ID>

The export will be saved in a folder with the name data_

python manage.py project_import "/konfuzio-target-system/data_123/" "NewProjectName"

Alternatively, you can merge the Project export into an existing Project.

python manage.py project_import "/konfuzio-target-system/data_123/" --merge_project_id <EXISTING_PROJECT_ID>

Database and Storage

Overview

To run Konfuzio Server, three types of storage are required.

First, a database is needed to store structured application data. We recommend using PostgreSQL if you’re starting from scratch, but we also support Oracle 19+ for customers who already have an Oracle database.

Secondly, a Blob storage is needed.

Thirdly, a Redis database is needed to manage the background Tasks of Konfuzio Server.

You can choose your preferred deployment option for each storage type and connect Konfuzio via environment variables to the respective storages. We recommend planning your storage choices before starting with the actual Konfuzio installation.

| Storage Name | Recommended Version | Supported Version | Deployment Options |
|---|---|---|---|
| PostgreSQL | Latest Stable | PostgreSQL 12 and higher | Managed (Cloud) Service, VM Installation, Docker, In-Cluster* |
| Oracle | Latest Stable | Oracle 19 and higher | VM Installation, Docker |
| Redis | Latest Stable | Redis 6 and higher | Managed (Cloud) Service, VM Installation, Docker, In-Cluster* |
| Blob Storage | Latest Stable | All with active support | Filesystem, S3-compatible Service (e.g. Amazon S3, Azure Blob Storage), In-Cluster* S3 via MinIO |

*If you use Kubernetes Deployment you can choose the ‘in-Cluster’ option for Postgres, Redis and S3-Storage.

Usage of database

Konfuzio Server will create a total of 43 tables and use the following data types. This information refers to release 2022-10-28-07-23-39.

| data_type | count |
|---|---|
| bigint | 6 |
| boolean | 35 |
| character varying | 78 |
| date | 2 |
| double precision | 29 |
| inet | 2 |
| integer | 138 |
| jsonb | 28 |
| smallint | 2 |
| text | 21 |
| timestamp with time zone | 56 |
| uuid | 1 |

Configuring PostgreSQL

You can use any version of PostgreSQL 12 or higher. We recommend using the latest stable version.

Depending on your configuration, you might have a PostgreSQL database as part of your Kubernetes cluster, in a Docker container, or on a separate host/VM. You can refer to the instructions for your specific setup to quickly spin up a new PostgreSQL database.

Once you have an address that your database routes to, you can set the DATABASE_URL environment variable to the connection string of your PostgreSQL database. For example:

DATABASE_URL=postgres://user:password@host:port/database
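To double-check a connection string before starting the server, you can split it into its parts. The following is a minimal sketch using Python's standard library. The helper name `parse_database_url` is hypothetical and not part of Konfuzio. It assumes that special characters in the user name or password are percent-encoded, as is usual for URLs.

```python
from typing import Dict, Optional, Union
from urllib.parse import unquote, urlsplit


def parse_database_url(url: str) -> Dict[str, Union[str, int, None]]:
    """Split a connection string of the form scheme://user:password@host:port/database."""
    parts = urlsplit(url)
    port: Optional[int] = parts.port
    return {
        "engine": parts.scheme,
        # Percent-encoded characters (e.g. %40 for "@") are decoded here.
        "user": unquote(parts.username or ""),
        "password": unquote(parts.password or ""),
        "host": parts.hostname,
        "port": port,
        "name": parts.path.lstrip("/"),
    }
```

If a part comes back empty or the port is missing, the connection string most likely does not follow the expected format.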

No further setup is needed. The Konfuzio Server will automatically create the required tables and columns during the migration step.

Configuring Oracle

You can use any version of Oracle 19 or higher. We recommend using the latest stable version.

Depending on your configuration, you might have an Oracle database as part of your Kubernetes cluster, in a Docker container, or on a separate host/VM. As Oracle cannot be freely distributed, you will need to provide the database yourself.

Once you have a database instance your Konfuzio installation can connect to, you can set the DATABASE_URL environment variable to the connection string of your Oracle database, whose format depends on your setup. For example:

DATABASE_URL=oracle://user:password@host:1521/database # with a SID

DATABASE_URL=oracle://user:password@/localhost:1521/orclpdb1 # with an Easy Connect string

DATABASE_URL=oracle://user:password@/(DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=localhost)(PORT=1521))(CONNECT_DATA=(SERVICE_NAME=orclpdb1))) # with a full DSN string

No further setup is needed. The Konfuzio Server will automatically create the required tables and columns during the migration step.

Environment Variables

Environment Variables for Konfuzio Server

General Settings

General settings for the Server.
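Each variable below is documented with a type (str, bool or int) and either a default value or a "Required" flag. Because environment variables are always plain strings, the typed value has to be derived from text. The sketch below illustrates one common way to do that. The helpers `env_bool` and `env_int` and the set of accepted "true" spellings are assumptions made for illustration, not a description of how Konfuzio Server parses its settings.

```python
import os
from typing import Mapping, Optional

# Assumption: these spellings count as "true"; everything else counts as "false".
TRUE_VALUES = {"1", "true", "yes", "on"}


def env_bool(name: str, default: bool, environ: Optional[Mapping[str, str]] = None) -> bool:
    """Read a boolean setting such as DEBUG, falling back to its default."""
    env = os.environ if environ is None else environ
    raw = env.get(name)
    if raw is None:
        return default
    return raw.strip().lower() in TRUE_VALUES


def env_int(name: str, default: int, environ: Optional[Mapping[str, str]] = None) -> int:
    """Read an integer setting such as CSV_EXPORT_ROW_LIMIT, falling back to its default."""
    env = os.environ if environ is None else environ
    raw = env.get(name)
    if raw is None:
        return default
    return int(raw)
```

With these helpers, `env_bool("DEBUG", False)` returns the documented default when the variable is unset, and `env_int("CSV_EXPORT_ROW_LIMIT", 100)` raises a `ValueError` if the variable contains something that is not a number.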

SECRET_KEY

- Type: str
- Required: Yes
- Description: Insert a random secret key. See docs. SECURITY WARNING: keep the secret key used in production secret!

HOST_NAME

- Type: str
- Required: Yes
- Description: Used in the E-Mail templates: https://example.konfuzio.com or http://localhost:8000 for local development. Note: Please include the protocol (e.g. http://) even if the variable is named HOST_NAME.

DEBUG

- Type: bool
- Default value: False
- Description: Use False for production and True for local development. See docs. SECURITY WARNING: don’t run with debug turned on in production!

ALLOWED_HOSTS

- Type: str
- Default value: DEFAULT_ALLOWED_HOST
- Description: A list of strings representing the host/domain names that Konfuzio can serve. This is a security measure to prevent HTTP Host header attacks, which are possible even under many seemingly-safe web server configurations. See docs.

BILLING_DEFAULT_CONTRACT_ID

- Type: str
- Default value: 'K0'
- Description: The Billing contract ID for this Konfuzio Server instance.

BILLING_API_KEY

- Type: str
- Default value: ''
- Description: The Billing API key used to connect with the Konfuzio License Server. See docs.

DATABASE_URL

- Type: str
- Required: Yes
- Description: Please enter a Postgres Database in the format of postgres://USER:PASSWORD@HOST:PORT/NAME. See docs.

ALLOW_PUBLIC_DOCUMENTS

- Type: bool
- Default value: True
- Description: Decide if Documents can be marked as public.

DATABASE_URL_SSL_REQUIRE

- Type: bool
- Default value: False
- Description: Specify whether the database connection requires SSL.

FLOWER_URL

- Type: str
- Default value: None
- Description: The URL to the Flower instance, if present. See docs.

DETECTRON_URL

- Type: str
- Default value: None
- Description: This is used to connect to the optional Document Layout Analysis Container. This is a URL in the form of http://detectron-service:8181/predict. You might need to adjust detectron-service to your service name or IP.

SUMMARIZATION_URL

- Type: str
- Default value: None
- Description: This is used to connect to the optional Summarization Container. This is a URL in the form of http://summarization-service:8181/predict. You might need to adjust summarization-service to your service name or IP.

CSV_EXPORT_ROW_LIMIT

- Type: int
- Default value: 100
- Description: The (approximate) number of rows that an exported CSV file of extraction results can include. Set to 0 for no limit, but beware of timeouts as the CSV files are exported synchronously.

HARD_DELETE_DAYS_LIMIT

- Type: int
- Default value: 30
- Description: The number of days after which Documents and Projects are permanently deleted. Soft-deleted Documents and Projects can be restored within this timeframe.

ACCOUNT_EMAIL_VERIFICATION

- Type: str
- Default value: 'mandatory'
- Description: Determines the email verification method during signup. Choose one of “mandatory”, “optional”, or “none”. When set to “mandatory”, the user is blocked from logging in until the email address is verified. Choose “optional” or “none” to allow logins with an unverified email address. In case of “optional”, the email verification mail is still sent, whereas in case of “none” no email verification mails are sent.

ACCOUNT_MEMBER_CONFIRMATION

- Type: str
- Default value: 'mandatory'
- Description: When set to “mandatory”, an invitation to become a Project Member must be accepted by the invited User. Choose “optional” or “none” to auto-accept Invitations to a Project immediately.

ACCOUNT_BLOCK_FREE_EMAIL_PROVIDERS

- Type: bool
- Default value: True
- Description: Block registration for users with an email address from a free provider according to the blocklist.

ACCOUNT_BLOCK_DOUBLE_SUB_DOMAIN

- Type: bool
- Default value: True
- Description: Block emails which have double sub-domains (like: foo@bar.foo.bar but not foo@bar.com).

MAINTENANCE_MODE

- Type: bool
- Default value: False
- Description: Set maintenance mode. When maintenance mode is enabled, all pages return a 503 error.

KONFUZIO_CACHE_DIR

- Type: str
- Default value: None
- Description: The temporary directory where the AI training process writes files.

ALWAYS_GENERATE_SANDWICH_PDF

- Type: bool
- Default value: False
- Description: Turn on/off the immediate generation of sandwich PDFs in a full Document workflow.

Background Tasks via Celery

Settings related to background tasks.

BROKER_URL

- Type: str
- Required: Yes
- Description: Enter a Redis database. See docs.

RESULT_BACKEND

- Type: str
- Required: Yes
- Description: Enter a Redis database. See docs.

TASK_ALWAYS_EAGER

- Type: bool
- Default value: False
- Description: When this is True, all background processes are executed synchronously. This will block the web interface until all tasks have finished. Do not use this in production. See docs.

WORKER_MAX_TASKS_PER_CHILD

- Type: int
- Default value: 50
- Description: Determine the number of tasks after which a worker process is replaced. This is used to mitigate memory leaks.

WORKER_SEND_TASK_EVENTS

- Type: bool
- Default value: False
- Description: See docs.

TASK_SEND_SENT_EVENT

- Type: bool
- Default value: True
- Description: See docs.

TASK_TRACK_STARTED

- Type: bool
- Default value: True
- Description: See docs.

WORKER_MAX_MEMORY_PER_CHILD

- Type: int
- Default value: 100000
- Description: Determine the amount of allocated memory after which a worker process is replaced. This is used to mitigate memory leaks.

TASK_ACKS_ON_FAILURE_OR_TIMEOUT

- Type: bool
- Default value: True
- Description: See docs.

TASK_ACKS_LATE

- Type: bool
- Default value: True
- Description: See docs.

BROKER_MASTER_NAME

- Type: str
- Default value: None
- Description: The name of the Broker Master when using Redis Sentinel. See docs.

RESULT_BACKEND_MASTER_NAME

- Type: str
- Default value: None
- Description: The name of the Result Backend Master when using Redis Sentinel. See docs.

THRESHOLD_FOR_HEAVY_TRAINING

- Type: int
- Default value: 150
- Description: The number of Documents in a Project’s dataset that triggers a “heavy training”. Heavy trainings are executed via a dedicated queue instead of the default shared queue. A training_heavy worker must be running in order to complete these trainings.

Blob Storage Settings

DEFAULT_FILE_STORAGE

- Type: str
- Default value: 'django.core.files.storage.FileSystemStorage'
- Description: By default, the file system is used as file storage. To use an Azure Storage Account, set to storage.MultiAzureStorage. To use an S3-compatible storage, set to storage.MultiS3Boto3Storage. See docs.

AZURE_ACCOUNT_NAME

- Type: str
- Required: Yes
- Description: The Azure account name. This is mandatory if DEFAULT_FILE_STORAGE is set to storage.MultiAzureStorage.

AZURE_ACCOUNT_KEY

- Type: str
- Required: Yes
- Description: The Azure account key. This is mandatory if DEFAULT_FILE_STORAGE is set to storage.MultiAzureStorage.

AZURE_CONTAINER

- Type: str
- Required: Yes
- Description: The name of the Azure storage container. This is mandatory if DEFAULT_FILE_STORAGE is set to storage.MultiAzureStorage.

AWS_ACCESS_KEY_ID

- Type: str
- Required: Yes
- Description: The access key of the S3 service. This is mandatory if DEFAULT_FILE_STORAGE is set to storage.MultiS3Boto3Storage.

AWS_SECRET_ACCESS_KEY

- Type: str
- Required: Yes
- Description: The secret key of the S3 service. This is mandatory if DEFAULT_FILE_STORAGE is set to storage.MultiS3Boto3Storage.

AWS_STORAGE_BUCKET_NAME

- Type: str
- Required: Yes
- Description: The name of the bucket in the S3 service. This is mandatory if DEFAULT_FILE_STORAGE is set to storage.MultiS3Boto3Storage.

AWS_S3_REGION_NAME

- Type: str
- Default value: None
- Description: The region name of the S3 service. Used only if DEFAULT_FILE_STORAGE is set to storage.MultiS3Boto3Storage.

AWS_S3_ENDPOINT_URL

- Type: str
- Default value: None
- Description: Custom S3 URL to use when connecting to S3, including scheme. Used only if DEFAULT_FILE_STORAGE is set to storage.MultiS3Boto3Storage.

AWS_S3_USE_SSL

- Type: bool
- Default value: True
- Description: Whether to use SSL when connecting to S3. This is passed to the boto3 session resource constructor. Used only if DEFAULT_FILE_STORAGE is set to storage.MultiS3Boto3Storage.

AWS_S3_VERIFY

- Type: str
- Default value: None
- Description: Whether to verify SSL certificates when connecting to S3. Can be set to False to not verify certificates or a path to a CA cert bundle. Used only if DEFAULT_FILE_STORAGE is set to storage.MultiS3Boto3Storage.

Email Sending Settings

SENDGRID_API_KEY

- Type: str
- Default value: None
- Description: API key to authenticate with Sendgrid for sending emails. Only needed if EMAIL_BACKEND is set to sendgrid_backend.SendgridBackend.

EMAIL_BACKEND

- Type: str
- Default value: 'django.core.mail.backends.smtp.EmailBackend'
- Description: The SMTP backend for sending e-mails. See docs.

EMAIL_HOST

- Type: str
- Default value: 'localhost'
- Description: The host to use for sending e-mails.

EMAIL_HOST_PASSWORD

- Type: str
- Default value: ''
- Description: Password to use for the SMTP server defined in EMAIL_HOST. This setting is used in conjunction with EMAIL_HOST_USER when authenticating to the SMTP server. If either of these settings is empty, we won’t attempt authentication.

EMAIL_HOST_USER

- Type: str
- Default value: ''
- Description: Username to use for the SMTP server defined in EMAIL_HOST. This setting is used in conjunction with EMAIL_HOST_PASSWORD when authenticating to the SMTP server. If either of these settings is empty, we won’t attempt authentication.

EMAIL_PORT

- Type: int
- Default value: 25
- Description: Port to use for the SMTP server defined in EMAIL_HOST. See docs.

DEFAULT_FROM_EMAIL

- Type: str
- Default value: 'Konfuzio.com <support@konfuzio.net>'
- Description: Specifies the email address to use as the “from” address when sending emails.

EMAIL_USE_TLS

- Type: bool
- Default value: False
- Description: See docs.

EMAIL_USE_SSL

- Type: bool
- Default value: False
- Description: See docs.

Email Polling Settings

SCAN_EMAIL_HOST

- Type: str
- Default value: ''
- Description: The SMTP host to use for polling emails.

SCAN_EMAIL_HOST_PASSWORD

- Type: str
- Default value: ''
- Description: SMTP password to use for the email server defined in SCAN_EMAIL_HOST. This setting is used in conjunction with SCAN_EMAIL_HOST_USER when authenticating to the email server.

SCAN_EMAIL_HOST_USER

- Type: str
- Default value: ''
- Description: SMTP username to use for the email server defined in SCAN_EMAIL_HOST. This setting is used in conjunction with SCAN_EMAIL_HOST_PASSWORD when authenticating to the email server.

SCAN_EMAIL_RECIPIENT

- Type: str
- Default value: ''
- Description: Recipient email address to be polled. Emails sent to this address will be polled into Konfuzio.

SCAN_EMAIL_SLEEP_TIME

- Type: int
- Default value: 60
- Description: The polling interval between email scans, in seconds.

Time limits for background tasks

More information about background tasks and their defaults can be viewed here.

DOCUMENT_OCR_TIME_LIMIT

- Type: int
- Default value: 3600
- Description: Maximum time limit for the OCR of a document, in seconds.

PAGE_OCR_TIME_LIMIT

- Type: int
- Default value: 600
- Description: Maximum time limit for the OCR of a page, in seconds.

EXTRACTION_TIME_LIMIT

- Type: int
- Default value: 3600
- Description: Maximum time limit for the extraction of a document, in seconds.

CATEGORIZATION_TIME_LIMIT

- Type: int
- Default value: 3600
- Description: Maximum time limit for the categorization of a document, in seconds.

EVALUATION_TIME_LIMIT

- Type: int
- Default value: 3600
- Description: Maximum time limit for the evaluation of an AI model, in seconds.

TRAINING_EXTRACTION_TIME_LIMIT

- Type: int
- Default value: 72000
- Description: Maximum time limit for the training of an extraction AI model, in seconds.

TRAINING_CATEGORIZATION_TIME_LIMIT

- Type: int
- Default value: 72000
- Description: Maximum time limit for the training of a categorization AI model, in seconds.

SANDWICH_PDF_TIME_LIMIT

- Type: int
- Default value: 1800
- Description: Maximum time limit for generating a sandwich PDF file, in seconds.

DOCUMENT_TEXT_AND_BBOXES_TIME_LIMIT

- Type: int
- Default value: 180
- Description: Maximum time limit for generating a Document’s text and bounding boxes, in seconds.

CLEAN_DELETED_DOCUMENT_TIME_LIMIT

- Type: int
- Default value: 3600
- Description: The maximum time for hard deleting Documents that are marked for deletion, in seconds. See docs.

CLEAN_DOCUMENT_WITHOUT_DATASET_TIME_LIMIT

- Type: int
- Default value: 3600
- Description: The maximum time for hard deleting Documents that are not linked to a Dataset, in seconds. See docs.

DOCUMENT_WORKFLOW_TIME_LIMIT

- Type: int
- Default value: complex_expression
- Description: The maximum time for the whole Document workflow in seconds. If a Document workflow does not complete within this time, the Document is set to an error state.

SNAPSHOT_CREATION_TIME_LIMIT

- Type: int
- Default value: complex_expression
- Description: The maximum time for the creation of a Snapshot, in seconds.

SNAPSHOT_RESTORE_TIME_LIMIT

- Type: int
- Default value: complex_expression
- Description: The maximum time for the restoration of a Snapshot, in seconds.

Keycloak / SSO Settings

The following values establish a Keycloak connection through the Mozilla OIDC package.

SSO_ENABLED

- Type:

bool- Default value:

False- Description:

Set to

Trueto activate SSO login via Keycloak.

PASSWORD_LOGIN_ENABLED

- Type:

bool- Default value:

True- Description:

Set to

Trueto activate password-based login and signup.

KEYCLOAK_URL

- Type:

str- Default value:

'http://127.0.0.1:8080/'- Description:

If you use Keycloak version 17 and later set this URL like:

http(s)://{keycloak_address}:{port}/. If you use Keycloak version 16 and earlier set this URL like:http(s)://{keycloak_address}:{port}/auth/.

KEYCLOAK_REALM

- Type:

str- Default value:

'master'- Description:

The Keycloak realm to be used for authentication.

SYNCHRONIZE_KEYCLOAK_GROUPS_WITH_KONFUZIO_GROUPS

- Type:

bool- Default value:

False- Description:

Determine whether to synchronize Keycloak groups with Konfuzio groups. See docs.

DEFAULT_GROUPS

- Type:

str- Default value:

'Default'- Description:

The name of the Groups that will be added to every User by default. To assign multiple Groups use a comma-separated list.

KEYCLOAK_SUPERUSER_GROUP_NAME

- Type:

str- Default value:

'Superuser'- Description:

The name of the Keycloak group that is mapped to superuser status on Konfuzio. See docs.

OIDC_RP_SIGN_ALGO

- Type:

str- Default value:

'RS256'- Description:

Sets the algorithm the IdP uses to sign ID tokens. See docs.

OIDC_RP_CLIENT_ID

- Type:

str- Default value:

None- Description:

The OIDC Client ID. For Keycloak client creation see: docs.

OIDC_RP_CLIENT_SECRET

- Type:

str- Default value:

None- Description:

The OIDC Client Secret. See docs.

OIDC_USERNAME_CLAIM

- Type:

str- Default value:

'email'- Description:

The OIDC username claim.

OIDC_USER_FILTER_CLAIM

- Type:

str- Default value:

'username'- Description:

The OIDC claim that identifies a User. This claim is used to uniquely identify a User. Choices are “email”, “username” and “customer”. If this claim changes for a User a new User object will be created.

OIDC_EMAIL_CLAIM

- Type: str
- Default value: 'email'
- Description: The OIDC email claim. This claim is used to get a User's email.

OIDC_GROUP_CLAIM

- Type: str
- Default value: 'groups'
- Description: The OIDC group claim. This claim is used to sync a User with Konfuzio Groups.

OIDC_FIRST_NAME_CLAIM

- Type: str
- Default value: 'firstName'
- Description: The OIDC first name claim. This claim is used to set the first_name attribute of a user.

OIDC_LAST_NAME_CLAIM

- Type: str
- Default value: 'lastName'
- Description: The OIDC last name claim. This claim is used to set the last_name attribute of a user.

OIDC_CUSTOMER_ID_CLAIM

- Type: str
- Default value: 'customerId'
- Description: The OIDC Customer claim. This claim is used to identify the Customer objects in the billing module.

OIDC_CONTRACT_ID_CLAIM

- Type: str
- Default value: 'contractId'
- Description: The OIDC contract ID claim. This claim is used to identify the Customer objects in the billing module.

OIDC_USE_PKCE

- Type: bool
- Default value: True
- Description: Whether the authentication backend uses PKCE (Proof Key For Code Exchange) during the authorization code flow.

OIDC_PKCE_CODE_CHALLENGE_METHOD

- Type: str
- Default value: 'S256'
- Description: Sets the method used to generate the PKCE code challenge.

OIDC_VERIFY_SSL

- Type: bool
- Default value: True
- Description: Whether the authentication backend should verify the SSL certificate of the OIDC provider.

OIDC_RP_SCOPES

- Type: str
- Default value: 'openid email'
- Description: The OpenID Connect scopes to request during login.

OIDC_RENEW_ID_TOKEN_EXPIRY_SECONDS

- Type: int
- Default value: complex_expression
- Description: The time in seconds that it takes for an OIDC ID token to expire.
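As a minimal sketch of how the SSO settings above fit together, the following shell snippet writes them to an env file. Only the variable names come from this guide; the file name sso.env, the Keycloak address, and the client ID and secret are placeholders.

```shell
# Write a hypothetical env file with SSO-related settings.
# All values are placeholders; replace them with your own.
cat > sso.env <<'EOF'
SSO_ENABLED=True
PASSWORD_LOGIN_ENABLED=True
KEYCLOAK_URL=https://keycloak.example.com:8443/
KEYCLOAK_REALM=master
OIDC_RP_CLIENT_ID=konfuzio-client
OIDC_RP_CLIENT_SECRET=change-me
OIDC_RP_SIGN_ALGO=RS256
OIDC_RP_SCOPES=openid email
EOF

# The file can then be passed to the container, mirroring the docker run
# example from the Billing API section (your_docker_image is a placeholder):
# docker run --env-file sso.env -d your_docker_image
```

An env file keeps the client secret out of the shell history, which a series of -e flags on the command line would not.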

Snapshot Settings

Settings for creating and restoring snapshots.

SNAPSHOT_DEFAULT_FILE_STORAGE

- Type: str
- Default value: 'django.core.files.storage.FileSystemStorage'
- Description: By default, the file system is used as file storage. To use an S3-compatible storage set: storages.backends.s3boto.S3BotoStorage. To use an Azure Blob Storage set: storages.backends.azure_storage.AzureStorage. See docs.

SNAPSHOT_HOST_NAME

- Type: str
- Default value: HOST_NAME
- Description: Sets a different hostname to be used for API calls when creating a Snapshot.

SNAPSHOT_RESTORE_ACROSS_ENVIRONMENTS

- Type: bool
- Default value: False
- Description: Whether or not Snapshots from other environments can be restored.

SNAPSHOT_AWS_ACCESS_KEY_ID

- Type: str
- Required: Yes
- Description: The access key of the S3 service for Snapshots. This is mandatory if SNAPSHOT_DEFAULT_FILE_STORAGE is set to storages.backends.s3boto.S3BotoStorage.

SNAPSHOT_AWS_SECRET_ACCESS_KEY

- Type: str
- Required: Yes
- Description: The secret key of the S3 service for Snapshots. This is mandatory if SNAPSHOT_DEFAULT_FILE_STORAGE is set to storages.backends.s3boto.S3BotoStorage.

SNAPSHOT_AWS_STORAGE_BUCKET_NAME

- Type: str
- Required: Yes
- Description: The name of the bucket in the S3 service for Snapshots. This is mandatory if SNAPSHOT_DEFAULT_FILE_STORAGE is set to storages.backends.s3boto.S3BotoStorage.

SNAPSHOT_AWS_S3_REGION_NAME

- Type: str
- Default value: None
- Description: The region name of the S3 service for Snapshots.

SNAPSHOT_AWS_S3_ENDPOINT_URL

- Type: str
- Default value: None
- Description: Custom S3 URL to use when connecting to S3 for Snapshots, including scheme.

SNAPSHOT_AWS_S3_USE_SSL

- Type: bool
- Default value: True
- Description: Whether to use SSL when connecting to S3 for Snapshots. This is passed to the boto3 session resource constructor.

SNAPSHOT_AWS_S3_VERIFY

- Type: str
- Default value: None
- Description: Whether to verify SSL certificates when connecting to S3 for Snapshots. Can be set to False to skip certificate verification, or to the path of a CA cert bundle.

SNAPSHOT_AZURE_ACCOUNT_NAME

- Type: str
- Required: Yes
- Description: The Azure account name. This is mandatory if SNAPSHOT_DEFAULT_FILE_STORAGE is set to storages.backends.azure_storage.AzureStorage.

SNAPSHOT_AZURE_ACCOUNT_KEY

- Type: str
- Required: Yes
- Description: The Azure account key. This is mandatory if SNAPSHOT_DEFAULT_FILE_STORAGE is set to storages.backends.azure_storage.AzureStorage.

SNAPSHOT_AZURE_CONTAINER

- Type: str
- Required: Yes
- Description: The name of the Azure storage container. This is mandatory if SNAPSHOT_DEFAULT_FILE_STORAGE is set to storages.backends.azure_storage.AzureStorage.

SNAPSHOT_LOCATION

- Type: str
- Default value: None
- Description: The location where all Snapshots are saved (e.g. /data/konfuzio-snapshots). This is mandatory if SNAPSHOT_DEFAULT_FILE_STORAGE is set to django.core.files.storage.FileSystemStorage.
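Which Snapshot variables are mandatory depends on the value of SNAPSHOT_DEFAULT_FILE_STORAGE. The following shell sketch checks that dependency before the server is started. The function check_snapshot_env is a hypothetical helper written for this guide and is not part of Konfuzio; the Azure backend name is taken from the SNAPSHOT_DEFAULT_FILE_STORAGE description.

```shell
# check_snapshot_env is a hypothetical helper for this sketch, not part of Konfuzio.
# It prints every missing variable and returns non-zero if any is unset or empty.
check_snapshot_env() {
    storage="${SNAPSHOT_DEFAULT_FILE_STORAGE:-django.core.files.storage.FileSystemStorage}"
    case "$storage" in
        django.core.files.storage.FileSystemStorage)
            required="SNAPSHOT_LOCATION" ;;
        storages.backends.s3boto.S3BotoStorage)
            required="SNAPSHOT_AWS_ACCESS_KEY_ID SNAPSHOT_AWS_SECRET_ACCESS_KEY SNAPSHOT_AWS_STORAGE_BUCKET_NAME" ;;
        storages.backends.azure_storage.AzureStorage)
            required="SNAPSHOT_AZURE_ACCOUNT_NAME SNAPSHOT_AZURE_ACCOUNT_KEY SNAPSHOT_AZURE_CONTAINER" ;;
        *)
            echo "unknown storage backend: $storage" >&2
            return 2 ;;
    esac
    missing=0
    for name in $required; do
        # Look up the variable whose name is stored in $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example: file system storage with the example path from this guide.
SNAPSHOT_DEFAULT_FILE_STORAGE=django.core.files.storage.FileSystemStorage
SNAPSHOT_LOCATION=/data/konfuzio-snapshots
check_snapshot_env && echo "snapshot settings look complete"
```

Running such a check in a container entrypoint or deployment script surfaces a missing credential at start-up instead of at the first Snapshot attempt.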

Keycloak Test Settings

These variables are only used for Keycloak integration tests. The admin variables are used to log in to the Keycloak admin panel; the test variables are used to log in to Konfuzio Server.

KEYCLOAK_ADMIN_USERNAME

- Type: str
- Default value: 'admin'
- Description: The Keycloak admin username.

KEYCLOAK_ADMIN_PASSWORD

- Type: str
- Default value: 'admin'
- Description: The Keycloak admin password.

KEYCLOAK_TEST_USERNAME

- Type: str
- Default value: 'fake@f.com'
- Description: Username for Keycloak integration tests.

KEYCLOAK_TEST_PASSWORD

- Type: str
- Default value: 'pass1234'
- Description: Password for Keycloak integration tests.

System Messages Settings

More information on System Messages can be found here.

SYSTEM_MESSAGES_ENABLED

- Type: bool
- Default value: False
- Description: Set to True to enable system messages in the interface.

SYSTEM_MESSAGE_OVERLAP_MAX

- Type: int
- Default value: 3
- Description: The maximum number of system messages that can be shown at the same time.

Dockerized AI Settings

Settings for the Dockerized AI feature. More information can be found here.

NEW_PROJECT_DEFAULT_BUILD_MODE

- Type: int
- Default value: 0
- Description: The default AI build mode for new projects. This mode can subsequently be changed in the project settings. 0 = pickle, 1 = bento.

NEW_PROJECT_DEFAULT_SERVE_MODE

- Type: int
- Default value: 0
- Description: The default AI serve mode for new projects. This mode can subsequently be changed in the project settings. 0 = pickle, 1 = bento.

BENTO_CONTAINER_BUILD_MODE

- Type: str
- Default value: 'local'
- Description: Determines whether bentos are containerized locally (“local”) or by an external Yatai (“remote”) service. For local containerization, the DOCKER_HOST environment variable must be set to point to the Docker socket. For external containerization, bentoml cloud login should first be run to authenticate with the Yatai service.

BENTO_SERVE_MODE

- Type: str
- Default value: 'local'
- Description: Determines whether bentos are spun up locally (“local”) or if deployment is delegated to an external Yatai service (“remote”). Note that setting ai_model_service_url manually on the AiModel instance will override this setting. For local serving, the DOCKER_HOST environment variable must be set to point to the Docker socket.

BENTO_SERVING_REMOTE_KUBE_CONFIG

- Type: str
- Default value: None
- Description: If BENTO_SERVE_MODE is set to ‘remote’, this environment variable specifies where to find the kubeconfig file for the remote Kubernetes cluster. If it is not set, the “in cluster” configuration is used.

BENTO_SERVICE_READY_TIMEOUT

- Type: int
- Default value: 600
- Description: Determines how long to wait for the Bento services to be ready before timing out.

BENTO_SERVING_SHUTDOWN_TIMEOUT

- Type: int
- Default value: 600
- Description: Determines the number of seconds after which the serving process (local or remote, i.e. the container) is shut down, counted from the last request received. This avoids recreating the container for every request. If set to 0, the container is shut down immediately after each request is processed.
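The idle-timeout rule described for BENTO_SERVING_SHUTDOWN_TIMEOUT can be illustrated with a few lines of shell. This is only a sketch of the rule as stated in the description, not Konfuzio's actual implementation; the function should_shut_down and the timestamps are hypothetical.

```shell
# Usage: should_shut_down LAST_REQUEST_EPOCH NOW_EPOCH TIMEOUT_SECONDS
# Returns 0 (true) once the idle time has reached the timeout.
should_shut_down() {
    idle=$(( $2 - $1 ))
    [ "$idle" -ge "$3" ]
}

BENTO_SERVING_SHUTDOWN_TIMEOUT=600
last_request=1000   # hypothetical timestamp in seconds

# 300 seconds idle: below the timeout, the container is kept.
should_shut_down "$last_request" 1300 "$BENTO_SERVING_SHUTDOWN_TIMEOUT" || echo "keep container"
# 600 seconds idle: the timeout is reached.
should_shut_down "$last_request" 1600 "$BENTO_SERVING_SHUTDOWN_TIMEOUT" && echo "shut down"
# Timeout 0: shut down right after the request.
should_shut_down "$last_request" 1000 0 && echo "shut down immediately"
```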

DOCKER_HOST

- Type: str
- Default value: None
- Description: If BENTO_CONTAINER_BUILD_MODE or BENTO_SERVE_MODE is set to ‘local’, the DOCKER_HOST environment variable must be set to point to the Docker socket.

DOCKER_NETWORK

- Type: str
- Default value: None
- Description: If BENTO_SERVE_MODE is set to ‘local’, you can specify the name of the network to use for the AI model containers. This is necessary if you’re running Konfuzio itself in a Docker container, which should be on the same network as the AI model containers.
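A minimal sketch of a local Dockerized AI configuration, assuming the Docker daemon listens on its usual Linux socket path. The network name konfuzio-net is a placeholder; it stands for whatever network the Konfuzio container is attached to.

```shell
# Build and serve bentos on the local Docker daemon.
export BENTO_CONTAINER_BUILD_MODE=local
export BENTO_SERVE_MODE=local
# Usual location of the Docker daemon socket on Linux; adjust if yours differs.
export DOCKER_HOST=unix:///var/run/docker.sock
# Placeholder network name; use the network of your Konfuzio container.
export DOCKER_NETWORK=konfuzio-net

# Guard taken from the descriptions above: local modes require DOCKER_HOST.
if [ "$BENTO_CONTAINER_BUILD_MODE" = "local" ] || [ "$BENTO_SERVE_MODE" = "local" ]; then
    : "${DOCKER_HOST:?DOCKER_HOST must be set for local bento modes}"
fi
echo "bento modes: build=$BENTO_CONTAINER_BUILD_MODE serve=$BENTO_SERVE_MODE"
```

When Konfuzio itself runs in a container, the socket also has to be reachable from inside that container, for example through a volume mount.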

Document Validation UI Settings

Settings that govern specific features of the Document Validation UI. Some of these settings mirror those from the DVUI package.

DVUI_HIDE_EMPTY_LABEL_SETS

- Type: bool
- Default value: False
- Description: Hide Label Sets that have no Labels assigned in the DVUI.

Other Settings

GOOGLE_ANALYTICS

- Type: str
- Default value: None
- Description: The Google Analytics ID to track the usage of the Konfuzio Server.

TEST_BENTO_WITH_DOCKER

- Type: bool
- Default value: False
- Description: Return None always.

Example .env file for Konfuzio Server